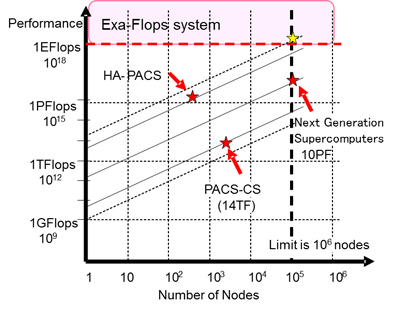

The performance of a parallel supercomputing system is the performance of each node (processor) multiplied by the number of nodes. Until now, performance has mainly been improved by increasing the number of nodes (weak scaling). However, because of the resulting higher power consumption and failure rate, this approach is becoming increasingly difficult.

To reach Exa-Scale (the step beyond Peta-Scale), it is necessary to:

– establish fault-tolerant technology for systems with more than 100,000 nodes.

– achieve 10 TFlops of performance in a single node.
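The two requirements can be tied back to the performance model (performance per node × number of nodes) with a quick calculation. This is a minimal sketch that assumes peak performance scales linearly with node count, ignoring communication and fault-tolerance overheads; the helper names `system_flops` and `nodes_needed` are illustrative only.

```python
# Peak-performance model: system performance = per-node performance x number of nodes.
# Assumes perfectly linear scaling (no communication or fault-tolerance overhead).
TERA = 10**12  # 1 TFlops
EXA = 10**18   # 1 EFlops


def system_flops(node_flops: int, num_nodes: int) -> int:
    """Aggregate peak performance of num_nodes identical nodes."""
    return node_flops * num_nodes


def nodes_needed(target_flops: int, node_flops: int) -> int:
    """Smallest node count whose aggregate peak reaches target_flops (integer ceiling division)."""
    return -(-target_flops // node_flops)


# 10 TFlops per node across 100,000 nodes gives 1 EFlops.
print(system_flops(10 * TERA, 100_000) / EXA)

# With hypothetical 1 TFlops nodes, the same target needs ten times as many nodes.
print(nodes_needed(EXA, TERA))
```

Under this model, every tenfold drop in per-node performance multiplies the node count by ten, which is why a per-node target is paired with the node-count target.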

Accelerators make it possible to improve per-node performance and to reduce the simulation time per step (strong scaling).

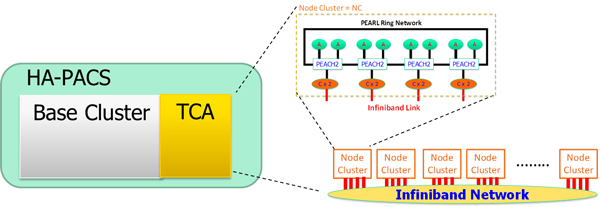

The Project Office for Exascale Computing System Development has been developing HA-PACS, a system on the scale of one PFlops that includes Tightly Coupled Accelerators, as a demonstration toward Exa-Flops systems.

Performance Target:

Base Cluster: 268 nodes × 3 TFlops per node (GPU 2660 GFlops + CPU 332.8 GFlops = 2992.8 GFlops) = 802 TFlops

TCA: more than 200 TFlops

Total: more than 1 PFlops
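The targets can be checked with a short calculation. The per-node figure is 2660 + 332.8 = 2992.8 GFlops, which is rounded to 3 TFlops; the 802 TFlops total follows from the unrounded value (268 × 3 TFlops would give 804 TFlops). The TCA figure is treated as a lower bound, since only "more than 200 TFlops" is stated.

```python
# Per-node peak performance of the base cluster, in GFlops (figures from the text).
GPU_GFLOPS = 2660.0
CPU_GFLOPS = 332.8
BASE_NODES = 268
TCA_TFLOPS_MIN = 200.0  # "more than 200 TFlops", used here as a lower bound

node_gflops = GPU_GFLOPS + CPU_GFLOPS            # about 2992.8 GFlops, i.e. roughly 3 TFlops
base_tflops = BASE_NODES * node_gflops / 1000.0  # about 802 TFlops
total_tflops_min = base_tflops + TCA_TFLOPS_MIN  # lower bound for the whole system

print(f"node:  {node_gflops:.1f} GFlops")
print(f"base:  {base_tflops:.0f} TFlops")
print(f"total: >{total_tflops_min / 1000:.2f} PFlops")
```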

Computational Node

GPGPU (general-purpose computing on GPUs): GPUs were originally designed for graphics processing and are now also used to accelerate scientific and technical computing. In recent years, thanks to rapid advances in semiconductor technology and the integration of many computing units on a single chip, their performance has improved significantly.

The HA-PACS Project is considering GPUs and GRAPE-DR (developed by the National Astronomical Observatory of Japan) as accelerators.

Tightly Coupled Accelerators HA-PACS/TCA

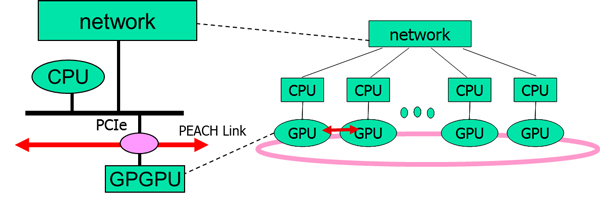

To eliminate the I/O bandwidth bottleneck that causes serious performance degradation, TCA (Tightly Coupled Accelerators) enables direct communication among GPUs across multiple computation nodes.

PEARL

PEARL (PCI-Express Adaptive and Reliable Link) enables direct communication among GPUs, increasing communication bandwidth and reducing latency.

The PEACH2 (PCI-Express Adaptive Communication Hub 2) chip and board, which implement PEARL, are under development. PEACH2 is implemented on an FPGA (Field Programmable Gate Array) so that its communication control can be programmed flexibly.

PEARL provides the following benefits:

– Autonomous inter-GPU communication -> High parallel performance for both accelerator code and general-purpose code

– Data can be copied directly between GPU memories -> High-speed parallel processing using GPUs alone

– Direct I/O to and from GPU memory -> Reduced overhead for fault tolerance, etc.

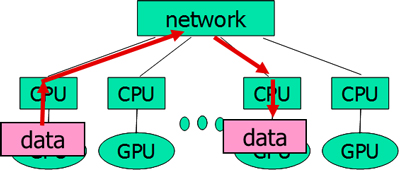

Without PEARL, the following problems occur:

– The CPU must control coupling and communication between GPUs -> Efficiency is lost because GPU communication depends on CPU control (for example, when GPUs need to communicate while the CPU is busy controlling I/O)

– Copying data between GPU memories takes up to 3 steps -> Significantly lower parallel-processing efficiency (peak data-transfer performance drops to one third)

– Communication between a GPU and an HDD is impossible without going through the CPU
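The one-third figure can be illustrated with a simple model. This sketch assumes (an assumption, not a detail given here) that the three steps are GPU memory to host memory, host to host over the interconnect, and host memory to GPU memory, that each step runs at the same bandwidth, and that the steps are not overlapped. The bandwidth and message size are hypothetical values.

```python
# Hypothetical model: a GPU-to-GPU copy staged through host memory vs. a direct copy.
# Assumes every step has the same bandwidth and steps run one after another (no pipelining).
STAGED_STEPS = (
    "GPU memory -> host memory",
    "host -> host over the interconnect",
    "host memory -> GPU memory",
)


def transfer_time(size_bytes: float, link_bw: float, steps: int) -> float:
    """Time to move size_bytes through `steps` serialized copies at link_bw bytes/s each."""
    return steps * size_bytes / link_bw


def effective_bandwidth(size_bytes: float, link_bw: float, steps: int) -> float:
    """Bytes delivered per second, end to end."""
    return size_bytes / transfer_time(size_bytes, link_bw, steps)


LINK_BW = 4e9  # hypothetical per-step bandwidth: 4 GB/s
SIZE = 256e6   # hypothetical message size: 256 MB

direct = effective_bandwidth(SIZE, LINK_BW, steps=1)
staged = effective_bandwidth(SIZE, LINK_BW, steps=len(STAGED_STEPS))
print(f"direct: {direct / 1e9:.2f} GB/s, staged: {staged / 1e9:.2f} GB/s, "
      f"ratio: {staged / direct:.3f}")
```

Real stages differ in bandwidth and latency and can be pipelined, so the actual loss depends on the system; the model only shows why three serialized, equal-cost copies leave one third of the peak.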